Getting My SEO Companies Markham To Work


Not known Details About SEO Companies Markham


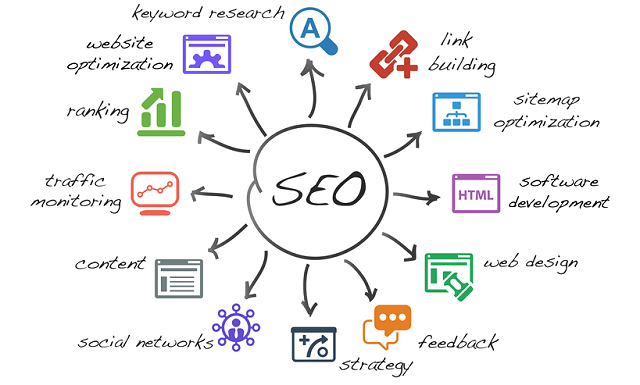

As an Internet marketing strategy, SEO considers how search engines work, the computer-programmed algorithms that dictate search engine behavior, what people search for, the actual search terms or keywords typed into search engines, and which search engines are preferred by the targeted audience. SEO is performed because a website receives more visitors from a search engine when it ranks higher on the search engine results page (SERP).

Webmasters and content providers began optimizing websites for search engines in the mid-1990s, as the first search engines were cataloging the early Web. Initially, webmasters only needed to submit the address of a page, or URL, to the various engines, which would send a web crawler to crawl that page, extract links to other pages from it, and return information found on the page to be indexed.

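The process involves a search engine spider downloading a page and storing it on the search engine's own server. A second program, known as an indexer, then extracts information about the page, such as the words it contains, where they are located, and any weight given to specific words, as well as all links the page contains. The extracted links are then placed into a scheduler so that the pages they point to can be crawled at a later date.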
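The spider, indexer, and scheduler described above can be sketched as a toy pipeline. This is a minimal illustration, not how any real search engine is built: the "web" is a hypothetical in-memory dictionary of URL to HTML, and regular expressions stand in for a proper HTML parser. The indexer records each word's position on the page, and newly discovered links go into a first-in, first-out scheduler queue.

```python
import re
from collections import deque

# Deliberately crude HTML handling (regexes): adequate for this toy example only.
LINK_RE = re.compile(r'href="([^"]+)"')
TAG_RE = re.compile(r"<[^>]+>")
WORD_RE = re.compile(r"[a-z0-9]+")

Index = dict[str, list[tuple[str, int]]]


def index_page(url: str, html: str, inverted_index: Index) -> list[str]:
    """Indexer: record where each word occurs on the page and return the page's links."""
    text = TAG_RE.sub(" ", html).lower()  # drop tags (and their attributes) before tokenizing
    for position, word in enumerate(WORD_RE.findall(text)):
        inverted_index.setdefault(word, []).append((url, position))
    return LINK_RE.findall(html)


def crawl(seed: str, web: dict[str, str]) -> Index:
    """Spider + scheduler: fetch pages breadth-first, queueing newly discovered links."""
    inverted_index: Index = {}
    scheduler = deque([seed])  # URLs waiting to be crawled later
    seen = {seed}
    while scheduler:
        url = scheduler.popleft()
        html = web.get(url)  # stands in for downloading and storing the page
        if html is None:  # unknown URL: nothing to download
            continue
        for link in index_page(url, html, inverted_index):
            if link not in seen:
                seen.add(link)
                scheduler.append(link)
    return inverted_index


# Hypothetical two-page site used only for illustration.
toy_web = {
    "/": '<p>SEO basics</p><a href="/crawl">crawling</a>',
    "/crawl": '<p>Crawling and indexing</p><a href="/">home</a>',
}
index = crawl("/", toy_web)
print(index["crawling"])  # [('/', 2), ('/crawl', 0)]
```

The `seen` set keeps the scheduler from re-queueing pages that link back to each other, which is why the crawl terminates on this cyclic two-page site.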

Our SEO Companies Markham PDFs

Early search algorithms relied on webmaster-provided information such as the keyword meta tag. Using metadata to index pages was found to be less than reliable, however, because the webmaster's choice of keywords in the meta tag could be an inaccurate representation of the site's actual content. Flawed data in meta tags, such as inaccurate or incomplete keywords, created the potential for pages to be mischaracterized in irrelevant searches. Web content providers also manipulated some attributes within the HTML source of a page in an attempt to rank well in search engines.
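To make the meta-tag problem concrete, here is a rough sketch that compares a page's declared keywords with its visible text using Python's standard `html.parser`. The function name `unsupported_keywords` and the sample page are hypothetical, and plain substring matching is a simplifying assumption; it only shows how declared keywords can drift from what a page actually says.

```python
from html.parser import HTMLParser


class MetaKeywordAudit(HTMLParser):
    """Collects <meta name="keywords"> entries and the page's visible text."""

    def __init__(self) -> None:
        super().__init__()
        self.keywords: list[str] = []
        self.text_parts: list[str] = []
        self._skip = 0  # depth inside <script>/<style>, whose contents are not page text

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "keywords":
            content = attributes.get("content") or ""
            self.keywords.extend(k.strip().lower() for k in content.split(",") if k.strip())
        elif tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.text_parts.append(data.lower())


def unsupported_keywords(html: str) -> list[str]:
    """Return declared meta keywords that never appear in the page's text."""
    audit = MetaKeywordAudit()
    audit.feed(html)
    audit.close()
    body_text = " ".join(audit.text_parts)
    return [k for k in audit.keywords if k not in body_text]


# Hypothetical page whose keywords promise more than its content delivers.
page = ('<html><head><meta name="keywords" content="cheap flights, hotels, car rental">'
        '<title>Hotels</title></head><body><p>Compare hotels in Toronto.</p></body></html>')
print(unsupported_keywords(page))  # ['cheap flights', 'car rental']
```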

In 2005, press reports described a company, Traffic Power, which allegedly used high-risk techniques and failed to disclose those risks to its clients. A magazine reported that the same company sued blogger and SEO Aaron Wall for writing about its ban from Google. Google's Matt Cutts later confirmed that Google did in fact ban Traffic Power and some of its clients.

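PageRank, the algorithm developed by Larry Page and Sergey Brin, estimates the likelihood that a given page will be reached by a web user who randomly surfs the web, following links from one page to another. In effect, this means that some links are stronger than others: a link from a page with a higher PageRank carries more weight, because that page is more likely to be reached by the random web surfer. Page and Brin founded Google in 1998. Google attracted a loyal following among the growing number of Internet users, who liked its simple design.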
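The random-surfer idea can be sketched with the textbook power-iteration form of PageRank. This is the classic published formulation, not Google's production ranking; the damping factor of 0.85 and the even redistribution of rank from pages with no outgoing links are conventional assumptions, and the three-page graph is hypothetical.

```python
def pagerank(links: dict[str, list[str]], damping: float = 0.85,
             iterations: int = 100, tol: float = 1e-10) -> dict[str, float]:
    """Textbook PageRank by power iteration over an adjacency list."""
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    ordered = sorted(pages)
    n = len(ordered)
    rank = {p: 1.0 / n for p in ordered}
    for _ in range(iterations):
        # A surfer on a page with no outgoing links jumps to a random page.
        dangling = sum(rank[p] for p in ordered if not links.get(p))
        # With probability (1 - damping) the surfer also jumps to a random page.
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in ordered}
        for page, targets in links.items():
            if targets:
                share = damping * rank[page] / len(targets)  # rank split evenly over outlinks
                for target in targets:
                    new_rank[target] += share
        delta = sum(abs(new_rank[p] - rank[p]) for p in ordered)
        rank = new_rank
        if delta < tol:
            break
    return rank


# Hypothetical graph: A and B both link to C; C links back to A.
ranks = pagerank({"A": ["C"], "B": ["C"], "C": ["A"]})
print(sorted(ranks, key=ranks.get, reverse=True))  # ['C', 'A', 'B']
```

C ranks highest because it has two inbound links, and A outranks B because A's single inbound link comes from the strong page C, which is the "some links are stronger than others" effect in miniature.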

Not known Incorrect Statements About SEO Companies Markham

The leading search engines, Google, Bing, and Yahoo, do not disclose the algorithms they use to rank pages. Some SEO practitioners have studied different approaches to search engine optimization and have shared their personal opinions, and patents related to search engines can also help in understanding how they work. In 2005, Google began personalizing search results for each user.

In 2007, Google announced a campaign against paid links that transfer PageRank. On June 15, 2009, Google disclosed that it had taken measures to mitigate the effects of PageRank sculpting, that is, adding the nofollow attribute to some links so that a page's PageRank flows only through its remaining links. Matt Cutts, a well-known software engineer at Google, announced that Googlebot would no longer treat nofollowed links in the same way as before, to prevent SEO service providers from using nofollow for PageRank sculpting.
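The effect of that change can be shown with a deliberately simplified model of how a page's rank is split across its links: no damping factor, and nothing about Google's actual implementation. The helper `passed_rank` and the ten-link page are hypothetical. Under the old behavior the rank is divided among followed links only, so nofollowing half the links doubles what the rest receive; under the new behavior it is divided among all links, and the nofollowed share is simply not passed on.

```python
def passed_rank(rank: float, links: list[tuple[str, bool]],
                sculpting_blocked: bool) -> dict[str, float]:
    """Rank passed to each followed link under a simplified, damping-free model.

    links holds (url, is_nofollow) pairs. With sculpting_blocked=False, rank is split
    among followed links only; with True, it is split among all links and the share
    belonging to nofollowed links is passed to no one.
    """
    followed = [url for url, is_nofollow in links if not is_nofollow]
    if not followed:
        return {}
    divisor = len(links) if sculpting_blocked else len(followed)
    return {url: rank / divisor for url in followed}


# Hypothetical page: 10 outgoing links, the last 5 marked nofollow.
links = [(f"/page-{i}", i >= 5) for i in range(10)]
before = passed_rank(10.0, links, sculpting_blocked=False)
after = passed_rank(10.0, links, sculpting_blocked=True)
print(before["/page-0"], after["/page-0"])  # 2.0 1.0
```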

The 2012 Google Penguin update attempted to penalize websites that used manipulative techniques to improve their rankings on the search engine. Although Google Penguin has been presented as an algorithm aimed at fighting web spam, it focuses mainly on spammy links, gauging the quality of the sites those links come from.

Some Of SEO Companies Markham

Bidirectional Encoder Representations from Transformers (BERT) was another attempt by Google to improve its natural language processing, this time in order to better understand the search queries of its users. In terms of search engine optimization, BERT was intended to connect users more easily to relevant content and to increase the quality of traffic coming to websites that rank on the search engine results page.

Yahoo! formerly operated a paid submission service that guaranteed crawling for a cost per click; this practice was discontinued in 2009. Search engine crawlers may look at a number of different factors when crawling a site, and not every page is indexed by search engines. The distance of pages from the root directory of a site may also be a factor in whether or not pages get crawled.
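"Distance from the root directory" can be measured as the number of path segments in a URL. The sketch below orders a crawl shallowest-first and can optionally skip pages beyond a depth limit. The text only says depth *may* be a factor, so this particular policy (and the `max_depth` cutoff) is an illustrative assumption, not any search engine's documented behavior; the URLs are hypothetical.

```python
from typing import Optional
from urllib.parse import urlparse


def path_depth(url: str) -> int:
    """Count non-empty path segments below the site root: '/' -> 0, '/a/b.html' -> 2."""
    return len([segment for segment in urlparse(url).path.split("/") if segment])


def crawl_order(urls: list[str], max_depth: Optional[int] = None) -> list[str]:
    """Shallowest pages first (ties broken alphabetically); optionally drop deeper pages."""
    candidates = [u for u in urls if max_depth is None or path_depth(u) <= max_depth]
    return sorted(candidates, key=lambda u: (path_depth(u), u))


urls = [
    "https://example.com/blog/2019/12/post.html",
    "https://example.com/",
    "https://example.com/services/",
]
print(crawl_order(urls, max_depth=1))  # ['https://example.com/', 'https://example.com/services/']
```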

Not known Facts About SEO Companies Markham

In May 2019, Google updated the rendering engine of its crawler to the latest version of Chromium. In December 2019, Google began updating the User-Agent string of its crawler to reflect the latest Chrome version used by its rendering service. The delay was to allow webmasters time to update their code that responded to particular bot User-Agent strings. Google ran evaluations and felt confident the impact would be minor.
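Code that compares the whole User-Agent string against one pinned value breaks as soon as the embedded Chrome version changes; matching only the crawler's product token does not. The sketch below contrasts the two approaches. The User-Agent strings are illustrative, modeled on the Googlebot desktop format with made-up version numbers, and because any client can send any header, a token match is a routing heuristic, not proof that a request really came from Google.

```python
import re

# Match the Googlebot product token rather than pinning the full User-Agent string,
# which changes whenever the embedded Chrome version changes.
GOOGLEBOT_TOKEN = re.compile(r"\bGooglebot/\d+(?:\.\d+)*")


def looks_like_googlebot(user_agent: str) -> bool:
    """Heuristic only: the header can be spoofed, so this does not verify origin."""
    return GOOGLEBOT_TOKEN.search(user_agent) is not None


# Illustrative strings; the Chrome version numbers are examples, not real crawl data.
UA_TEMPLATE = ("Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko; compatible; "
               "Googlebot/2.1; +http://www.google.com/bot.html) Chrome/{} Safari/537.36")
old_ua = UA_TEMPLATE.format("74.0.3729.131")
new_ua = UA_TEMPLATE.format("79.0.3945.120")

pinned_match = new_ua == old_ua  # brittle exact-string check
token_match = looks_like_googlebot(new_ua)
print(pinned_match, token_match)  # False True
```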

To avoid undesirable content in search indexes, webmasters can instruct spiders not to crawl certain files or directories through the standard robots.txt file in the root directory of the domain. Pages typically prevented from being crawled include login-specific pages such as shopping carts and user-specific content such as search results from internal searches. In March 2007, Google warned webmasters that they should prevent indexing of internal search results because those pages are considered search spam. In 2020, Google sunsetted the standard (and open-sourced its code) and now treats it as a hint, not a directive.
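Python's standard library ships a robots.txt parser, `urllib.robotparser`, which makes it easy to check how a compliant crawler would read such rules. The robots.txt content and the `shop.example` URLs below are hypothetical, written to block a shopping cart and internal search results as described above.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for a shop: block the cart and internal search results.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://shop.example/cart",
            "https://shop.example/search?q=shoes",
            "https://shop.example/products/shoes"):
    # can_fetch answers whether a crawler identifying as "Googlebot" may fetch the URL.
    print(url, parser.can_fetch("Googlebot", url))
```

The parser only reports what the rules say; nothing stops a non-compliant crawler from fetching a disallowed URL anyway. Note also that `Disallow` rules are path prefixes, so `/cart` would block `/cartography` as well.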
